by Kent Lyons, May '22

In my last article, I discussed how one might consider the overall pipeline of a research idea from inception to the eventual consumer of the research. After the research is "done", what happens to it, and how can we work backwards from the intended consumption to help bias it toward success? In this article I extend that line of thought using Christensen's Theory of Disruption. The theory articulates two different types of innovation and two different kinds of disruption. Here we reflect on these trajectories to see how research might be adopted in these different contexts.

Clay Christensen was a professor at the Harvard Business School and through his research and writings, popularized the term "disruption". As is often the case, when a common language term is used to describe a more precise technical concept, it is very easy for that word to be reappropriated, and this is very much the case with "disruption". So here I'll use Christensen's Theory instead to try and be more precise with meaning and terminology.

While the notion of disruption has been turned into a Silicon Valley meme, the ideas behind Christensen's work started with his Ph.D. dissertation. That research matured and spread more widely with his books "The Innovator's Dilemma" and "The Innovator's Solution". Here I will cover some of the main topics, especially as they relate to product development, and add my own twist on how the commercialization of research fits into that framework. For a better background in how businesses work, what it means to make a product better, and business life cycles, I highly recommend you read these two books in particular.

Christensen was interested in the question of why highly successful companies with great businesses would, almost overnight, fail and be replaced by different companies. This is the origin of the idea of "disruption": the incumbents were disrupted by a newcomer. His original research was in the computing industry, with hard drive manufacturers in particular. As a result I find his work particularly poignant, being in computing myself and also having lived through some of this history. I did an internship at the DEC Western Research Lab just as it was acquired by Compaq. Those who know computing history should recognize both Digital and Compaq, but they are now very long gone. The Sun and SGI workstations that were so powerful when I started college suffered similar fates.

As the computing paradigm shifted from minicomputers, to workstations, to desktops, and now to mobile, the companies that were dominant in each of those eras were replaced by a new set of companies. Apple managed to all but kill Nokia, Motorola and RIM (with its BlackBerry) in just a few short years. And it is not that Nokia and the others didn't understand the threat of Apple (I was working at Nokia at the time). While they dismissed the iPhone at first, once it took off, tremendous resources were devoted to trying to keep their dominant position in the market. Nokia failed. Motorola failed. And RIM failed. Just like SGI and Sun did before, and DEC before that. And to paraphrase one of Christensen's comments - it's not like the CEOs of these highly successful and praised companies woke up one day stupid. There was something else at play. Christensen makes a strong argument that the problems were really not technical ones, even though each of these shifts saw tremendous technical changes. Instead they were organizational challenges, both within the company itself and in the supply chain it is situated within.

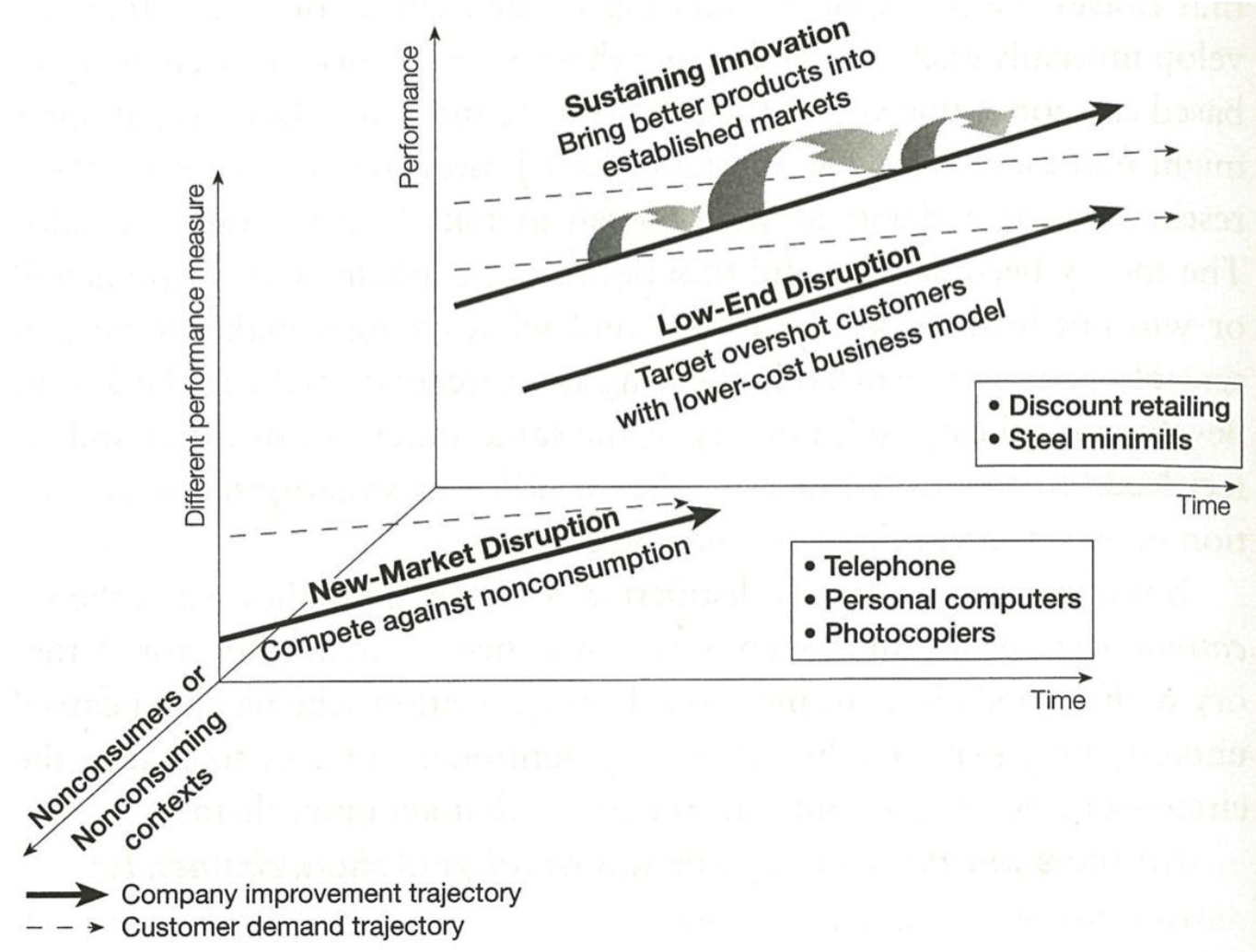

In the Innovator's Solution, Christensen puts forth the following model and articulates two kinds of innovation and two kinds of disruption. The iPhone fits in as a new market disruption.

Most of the time a company is working on "Sustaining Innovation". They are incrementally improving their products. This might be adding a new feature, fixing bugs in software, and so on. In this case, there is a relatively clear understanding of "performance" - what problem is the product solving and what does it mean to make it better. As an example, for decades, CPU performance meant clock frequency, MHz and then GHz. Clock performance was dominated by the size of transistors. And as transistors shrank, they could go faster and more could be added to a die. This was Moore's Law (which, as a side note, was not a law of nature but instead a challenge to the engineers at Intel).

Many of those improvements could be made incrementally. Once a new version is made, the steps to make the next version are usually rather self-evident. They may take a lot of engineering and other resources, but the path is rather clear. Occasionally, to continue on the path of improved performance, more substantial changes need to be made. These are discontinuities in the technology, or in Christensen's model, Radical Sustaining Innovations. For CPUs, a few different times, this has meant completely rethinking how to create transistors for the chip. In the early days this was a change in process technology, going from NMOS to CMOS. Or more recently the shift to FinFET transistors. For these types of technology transitions, it becomes clear that the current line of incremental improvements is coming to an end, but there is still a strong demand for continued improvements. So a new technology is created. Often this can involve rather significant research, but the target, the notion of performance and what is better, does not change.

Another observation Christensen makes is that the desire for increased performance also goes up over time. With CPUs, as Intel and others made faster chips, customers found new uses for that speed, and therefore induced demand for even faster CPUs. At any given time there is a distribution of needs in the market. There are customers that are happy with relatively low performance. These customers take the cheapest stuff and are usually low margin customers (because the products are cheap, there is not a lot of room for profit on these products). At the other end of the spectrum are a handful of customers that want as much performance as possible. They have significant needs and will pay whatever price to meet them. These are the high margin customers. A company loves high margin customers and works to capture more and more of them, producing higher performance products. With each new iteration of the product, as the technology improves, the company and its product slowly move upstream. Notably, the market as a whole also moves upstream, as customers find more uses for the performance offered. One of Christensen's key insights is that the rate at which the company improves its products and the rate at which customers' desired performance increases do not match. Due to the nature of technical innovations, the company's products often move to the top of the performance band faster than the market's demand for that performance grows.

This observation leads to Christensen's first form of disruption - "Low End Disruption". How do you displace an incumbent? The answer is to make something cheaper and worse! Cheaper makes sense intuitively. The less expensive something is, the more people who can buy it. But worse? Why intentionally make something worse? This approach seems very counterintuitive.

The answer lies with the aforementioned increases in performance. If a new entrant comes in to try and make something cheaper and better than the incumbent, they are a direct threat to that incumbent. The incumbent will mobilize its resources to fight off the threat (just like it spends significant resources trying to defend or take business from its current peers). The big, established company will almost certainly win.

What if, instead, the new entrant made a product at the low end of the market (cheaper and worse), and figured out all of the business processes to sell it profitably? Now things are different. The incumbent is probably happy to get rid of its low margin customers. That frees up resources to go after the bigger money with the high margin customers. And because the new entrant is working with the low margin customers, to be profitable, it needs to be doing business differently. It needs to be more efficient to make a profit. This likely involves rather significant differences in the business model and supply chain (how it procures its inputs and sells its outputs). This is how things start, but we need to consider the dynamics as well.

Over time, the new entrant on the low end makes incremental sustaining innovations, slowly increasing the performance of the product (while keeping the operational efficiencies it started with). It is able to capture more and more of the market as it moves towards the middle of the performance demand. The inclination of the incumbent is to "retreat up market" as Christensen puts it. They can see they're losing market share at the bottom, and might be ok with that. And if they are not, the easiest way to make up for the losses is to go after more of the higher margin customers. Eventually, however, the incumbent's product shoots out the top end of the market. With ultra high end products, even though the amount each customer is willing to pay is high, the total number of customers, and therefore the size of the market, gets smaller and smaller. The incumbent dies as it has no market to sell to, and the disruptor now owns the market.

That is how a low-end disruption works. The disruptor starts in an undesirable part of the market (the bottom) and figures out how to be profitable there. The incumbent ignores the new entrant because it doesn't perceive the entrant as a threat. With the cheaper and worse product, the entrant is "only" taking the low end of the market, the part that is not valuable to the incumbent. Over time, as technology improves, the newcomer gradually increases performance and moves up market, displacing more and more of the incumbent's business. The incumbent also continues to increase performance, but to the point where the market is too small to support the business. And the incumbent cannot compete with the newcomer because its cost structures are fundamentally different and, in particular, more expensive.
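The dynamics described above can be made concrete with a toy model. This is only a sketch under stated assumptions, not anything from Christensen's books: the growth rates, the three demand tiers, and the starting performance levels are all hypothetical numbers picked to make the effect visible. It assumes both companies improve at the same yearly rate, that customer demand grows more slowly, and it ignores cost structure entirely.

```python
"""Toy model of low-end disruption: products improve faster than demand grows."""

# All numbers below are hypothetical, chosen only to make the dynamics visible.
DEMAND_GROWTH = 0.10    # yearly growth in the performance customers want
PRODUCT_GROWTH = 0.25   # yearly growth in the performance products deliver

# Starting performance demanded by each customer tier (arbitrary units).
DEMAND_START = {"low": 1.0, "mid": 4.0, "high": 10.0}

# Starting performance delivered by each company (same arbitrary units).
PRODUCT_START = {"incumbent": 8.0, "entrant": 1.0}


def level(start, growth, year):
    """Compound a starting level forward by the given number of years."""
    return start * (1.0 + growth) ** year


def first_year_meeting(company, tier, horizon=50):
    """First year the company's product meets or exceeds a tier's demand."""
    for year in range(horizon + 1):
        product = level(PRODUCT_START[company], PRODUCT_GROWTH, year)
        demand = level(DEMAND_START[tier], DEMAND_GROWTH, year)
        if product >= demand:
            return year
    return None  # never reached within the horizon


if __name__ == "__main__":
    for company in PRODUCT_START:
        for tier in DEMAND_START:
            year = first_year_meeting(company, tier)
            print(f"{company} meets {tier}-end demand in year {year}")
```

With these made-up numbers, the incumbent overshoots even the high-end customers by year 2, while the entrant, starting at the bottom, is good enough for the mid tier in year 11 and the high end in year 19. The specific years mean nothing; the point is that as long as products improve faster than demand grows, the "worse" product eventually becomes good enough for everyone.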

So that is the theory. What does this look like in practice? Well, what happened to SGI and its high end graphics machines? PCs were horrible for graphics in comparison. However, they were much, much cheaper and slowly got better over time. Eventually it made sense to buy a cluster of PCs and turn them into a render farm instead of paying the premium for an SGI workstation. The same thing is happening in the CPU industry between Intel and ARM. ARM for a long time was the cheaper and worse option relative to Intel. But generation after generation, ARM processors got better. Intel's response was to not worry about these low end CPUs too much and instead focus on the server market where Intel's performance could shine and the customers would pay a premium. (Intel did try to gain traction in the mobile space several times but never really succeeded.) Fast forward to today and Apple's ARM-based CPUs in its newest laptops, which grew out of Apple's phone CPUs, are arguably better than Intel's offerings. And we are now seeing more and more ARM in the cloud, where Intel has dominated so far. Can Intel hold on? Or will ARM win out? Based upon Christensen's Theory, it would seem that it is only a matter of time before ARM wins out there as well. The cheaper and worse got better and better. Yet it still remained cheaper relative to the incumbent, as the business started lean and much more efficient.

There is another kind of disruption in Christensen's theory and that is the "New Market Disruption". Here the new entrant changes the rules of the game and the incumbent can't follow suit. The iPhone was a new market disruption. It is getting hard to remember, but the high end phones of the day when the iPhone was released were dominated by business and productivity usage (anyone remember PIM?). The BlackBerry was king of mobile email, for example. And when the iPhone was released, it was, by pretty much all measures of the day, a bad productivity phone. The first iPhone was 2.5G when 3G was already widely deployed. All contemporary phones had replaceable batteries so that power users could swap out batteries midday. The iPhone battery was built in. There was no physical keyboard - Nokia supposedly had user studies showing that phones needed a keypad. And the original iPhone didn't even have the App Store yet (not that anyone else did either, but it was not the reason the iPhone got traction as it didn't yet exist).

By looking at the values of the market at that time, the iPhone was bad on pretty much all fronts. Yet none of that mattered. The iPhone was playing a different game. Between the polished design and capacitive touch screen interface, the iPhone was targeting a different, broader market.

Jobs also did something very unusual - he negotiated with AT&T so that the phone could be bought directly from Apple. This was a significant shift in the cell phone distribution channel. As a counterexample, Nokia was an early adopter of user-centered design and thought a lot about the end user. However, the end user was not Nokia's customer; AT&T was. Nokia sold its phones to AT&T and then AT&T sold them to end users with service. If AT&T didn't want the phone, then it didn't matter what the end customer may or may not want. (We found this out the hard way with some of our work at Nokia.)

So all together, Apple went after a new market, one that had different notions of performance relative to the incumbents. Apple rapidly iterated, and then eventually with the app store allowed for a tremendous change in the capabilities and functionality of mobile devices. Apple also thought about its supply chain and distribution channels in new ways. By the time the incumbents realized the market had shifted, they were unable to adapt and compete. They were left behind playing the old game and at a very fundamental level not able to understand or play the new one.

Another part of Christensen's work I want to point out is around business processes and supply chains, which I briefly touched upon with this iPhone example. A company does not operate in a vacuum. There are other companies that supply inputs for the product. And the product itself is sold through some sort of sales channel. There is a whole ecosystem that gets developed around successful products and businesses. Intel doesn't sell computers; it sells the chips to PC and laptop manufacturers who produce the computers. Those go to distributors, and finally to retail outlets. Car manufacturers don't make all of the components for a car. They rely upon the tier 1 suppliers to provide many of the major components. And with the exception of Tesla, car manufacturers also don't sell cars to the end consumer. Instead they are sold to dealerships, which then interface with the customer.

For sustaining innovations, many of the improvements can happen in more isolated ways in this value chain. At each step, the notions of performance are relatively stable and the company knows how to make things better for its customer. For disruptions, this ecosystem is upset. The low-end disruption might cut out whole chunks of the value chain or reshape it in an effort to make it more efficient (the company needs to make money with slimmer margins and this is one way to do it). For new market disruptions, the product by definition is new as perceived by the market, so new supply chains and marketing and sales channels likely need to be created. This is Apple selling its phones directly to the customer and using AT&T only for cell service. Or Tesla bypassing the dealers and selling directly to customers, and with that also upending things like how vehicles are serviced, with its mobile service fleet.

So that's all great. But what does it have to do with research and productization of that research? Using Christensen's Theory, we can examine where a company is, and what types of innovation it needs or can use. Most of the time, most companies are in the sustaining innovation mode. They are working to incrementally improve their product. Is research needed here? Arguably no. There may be new things that need to be learned or created, so they might be research in that sense. But that is likely not "Research" with a capital "R". What is learned or made likely isn't moving the state of humanity's knowledge forward in a significant way. Returning to use-inspired basic research, most likely what is needed is Edison, not Pasteur. Are the results of the work just relevant to the current product or can they be generalized to other domains? Maybe with the right creative approach they can be generalized, but the default answer is probably not. The employees of the company all have a pretty good sense of what is needed to make the current product "better". And that better has tons of low-hanging fruit like "don't crash". There's also an approximately infinitely long list of tickets that have already been filed in Jira if anyone in the org is out of ideas for improvements.

So "Research" is likely not needed for incremental sustaining innovations. What about radical sustaining innovations? Here I think the answer is maybe. Some of these radical innovations might "just" require implementation. I completely replaced the speech recognition system for Tesla. Before I started my work, you could only speak a handful of commands. The lack of good speech rec was often complained about on forums and the like. So I went about replacing it with state of the art technology. Under the "hood", this involved swapping out and upgrading a lot of boring technical bits. It also involved creating a new architecture to allow speech control of (ideally) all of the UI elements, and on the speech rec and NLP side, using fundamentally different (newer) technology. I didn't write my own speech or NLP system, but instead plumbed everything together to allow significantly enhanced capabilities. For all of the things on the main Tesla UI screen (and a handful buried deeper like HVAC, mirrors and the glove box), if you could poke at it on screen, after my work, you could also speak to it. I didn't change any notions of performance; what it meant to be speech operated was more or less the same. Yet the number of things one could control and the ability to detect speech and properly respond to it were significantly improved. Similarly, the technology needed to do this had not existed only a handful of years before and was very different from what was in use at the time. My guess is that there are a lot of radical sustaining innovations more or less in this camp as well. How do we take what is known and apply it to a given problem? A lot of it comes down to execution and implementation details. As Gibson put it, "The future is already here. It's just not evenly distributed yet." Having said that, there are definitely things that might not happen without even more significant research.

A different example is autonomous driving. If we look at autonomous driving as a sustaining innovation (and it might instead be a new market disruption), then it can be seen as an extension of ADAS (advanced driver assistance systems). To help improve safety on the roads, we have transitioned from early technology like anti-lock brakes to radar based collision warnings. An extension of this is to use autonomous driving technologies to prevent even more accidents and take over from a driver who might otherwise be an imminent danger to themselves or to others. The underlying technologies to do this (AI for perception, planning and control) are rather different from what is needed for current ADAS systems. Or in other words, it is unlikely you can take an ADAS system and incrementally improve it to become an autonomous driving system. But from a market perspective, if the fundamental dimension of performance is something around safety, then these types of autonomous driving technologies would be a radical sustaining innovation. The notion of performance and what it means to be better (say reducing crashes) remains the same, yet the technology is fundamentally different. It is possible that the electrification of vehicles, replacing internal combustion engines (ICE) with electric motors and batteries (BEVs), might also be a radical sustaining innovation. The technologies are significantly different from the current ones. But if the market's notion of vehicle performance remains the same, this would also be a radical sustaining innovation. From what I can tell, having been in this industry for a while, the legacy car manufacturers are treating these technologies as sustaining innovations. Time will tell if that is the case, or if they are instead disruptive technologies. Either way, getting back to the ideas in my previous article, a use-inspired basic research approach could work here. The use, what it means to be better, is well understood. How to get there might be an open problem, and the research can be used to fill the gap. However, if while conducting that research the results provide an answer that improves a different performance metric (even if closely aligned), it likely isn't a sustaining innovation, and could be destined for adoption challenges.

When I was at Intel Research, the chatter for a time was to conduct "off road map research". That sounded awesome as a junior industrial researcher. I interpreted that broadly as - go do new stuff the rest of Intel isn't doing. After a while (I think after I left Intel and got some more perspective) I realized that the engineers, PMs, VPs, etc. in charge of those road maps could plan in a straight line basically forever. Their job is to think deeply and execute on those sustaining innovations. So if off road map isn't off the end, what is it? Off to the side perhaps? If so, where might those research results go? How do they intersect with Christensen's Theory?

If they're not a sustaining innovation (e.g. not aligned with the current road map), then it seems we're left with one of the two disruptions. Is it a low-end disruption? These might be enabled by new technologies, but what needs to be paired with them is an organization that is leaner and more cost efficient than the incumbent. And if that work came out of the incumbent's corporate research, then it's really hard to be leaner than yourself! So instead, this path would require spinning up a new organization that is sufficiently decoupled from the parent so it can do things in a new, leaner way. The other problem is that if the technology is in the same business space, then this spin out is now a direct competitor to the main business. If it's going down the cheaper and worse path, this might be viable in theory. But companies tend to be very averse to cannibalizing themselves (even if it is inevitable in the marketplace). At one interview I did years ago, there was a concern about the big old company I was interviewing at turning into Foxconn (the company responsible for much of Apple's manufacturing). They didn't want to be just a manufacturer to someone else that owned the valuable part of the supply chain. The first time this came up, I didn't have much of a response. By the end of the day, after I'd thought about it more and the comment was made in a few different ways, I asked: "What if Foxconn owned Apple?" I got a rather odd look in response - big companies don't think that way!

Ok, so it is probably not a low-end disruption. What about a new market disruption? Again, on paper this sounds like a viable path. Can the research be used to create some sort of new business? Possibly, but there are of course challenges. For example, does the new market actually exist? It is currently unpopulated, but is that because it's a bad idea to go there? Or is it because it hasn't been tried yet? Unfortunately, big companies tend to be really bad at starting these kinds of businesses. First, most don't know how to start a new business at all. They instead are really in the business of keeping their current BUs going. But ignoring that, the new business is often kept too close to the parent company, so all of the existing org's legacy processes are carried over. For the current business, those processes work. But why should they be used for the new one? Are they appropriate (probably not)? A key one is the sales channel. As I alluded to above, at Nokia we prototyped some cool new stuff that might have fit the new market category (the need in the market was yet to be proven though). However, it didn't get that far. Nokia's customer was AT&T. And AT&T bought smartphones. We built something that was not a smartphone, and the business unit had no idea how to (or desire to) sell the new thing we built to AT&T. And they were not empowered (or did not know how) to build a new sales and distribution channel. So it was dead. And this was despite high visibility and support from senior leadership in the company. There was no effort parallel to the research to create the needed new channels for the possible new product.

Another common failing in creating new products is not enough patience to grow. The big legacy company might want to start something new, but the motivation is often driven by a need to move its financial numbers. And since the company is already successful, moving those numbers means the new product needs to be rather successful, and fast. There isn't the patience to plant a bunch of seeds and let them grow (and accept the risk of many never germinating fully). They want the next Facebook or Apple today! I'm aware of very few businesses that hit $1B after only a year or two. Most take lots of time and organic growth to get there, and many never do. (Big companies can be spoiled, though, and do occasionally have this capability or move into adjacent businesses through M&A.) The plant seeds and grow strategy is instead the game of startups and VCs. They try to find a product market fit with something new. A few will work, but many never really take off, for many, many reasons. I'm not the first to make this observation, and it seems places like Google X are set up to play this game differently. However, from the outside, the need for big wins still seems to be a strong bias in the projects they take on. It's not clear how knowable that really is at the outset of work. Did Facebook or Google know what they would turn into? The founders had this hope. What about the creators of MySpace or AltaVista?

Another related point Christensen makes in his books is that big companies are finely tuned machines to crank the handle of the current product and market. They've found something that works and they want to extract as much value out of that successful business as possible. Anything besides doing that is a distraction from extracting that value. As such, the companies put into place a lot of incentives to make the business operate as efficiently as possible. They have sales processes that work and reward them. They have engineering practices that work and reward them. Doing new things, unless there is an obvious way to show incremental results for the existing working business, is very hard, and mostly on purpose. That also means that companies build an immune system against new ideas. Most of the time those new ideas are a distraction. This is one of the uphill battles that often needs to be fought with research and research results, especially if the research points to a new/different way of doing things. Why do that new thing when the current thing is (mostly) working? Who is the research really for? Whose life is it making better, and at what cost?

I've yet to personally encounter a research lab that also has resources to spin out successful results. Instead the focus is on "tech transfer" to the existing business. However, in light of all of the above discussion, how many research results are really in line with sustaining innovations? And even if the researcher thinks there should be good alignment, is there really? Is the research missing some key details about the business that result in a more significant disconnect? Researchers are smart and great at telling their story. But they also often make a variety of simplifying assumptions to perform the research. Are those simplifying assumptions in line with the business? And to use an old adage, the customer is always right. In a research lab the customer is almost certainly the business unit, and they have notions of what it means to make things better. Is the research really doing that? This is applying the same notions of performance used in Christensen's Theory, only now the product and customers are internal. What does it mean to make the business process better? There are likely well developed notions here (even if tacit) and the research, in order to offer an incremental improvement, needs to be well aligned. If the research points in a different direction, say a possible new product, then the business unit is the wrong group to consume it. They are tasked with making the current product better. And if it is aligned, they already have a road map of new features and/or products. Why do they need the researcher's new one?

Putting all of this together, where are the opportunities for applying research in a product setting? The easiest path is to make the current thing better. But is that research? And what do we do with the other research results that point elsewhere? Or maybe the charter of the research org is to go find new opportunities the business units are not considering. In that case, who is going to consume them? The current BUs are more than busy already. So you need the resources to stand up something new around the results as well. Could a big company do this? I think so, but it would be challenging and require special support from executives and the ability to decouple from the main business. That decoupling is needed both so the new venture doesn't get the hug of death and so it can prove out the new market, or not (see Christensen's case study of the IBM PC as a positive example).

So with Inovo Studio, the idea is to confront these challenges directly. By doing applied research independent from a business unit, we're not trying to shoehorn results into an existing business or product. Instead, the research results that are promising are tested to check product market fit, and if that works, spun out into a company. From there, we can turn back to Christensen's theory. Once the research is market proven, maybe it is in line with a current company's key needs and the startup is acquired (this is a common exit strategy for many startups). We can also try the low-end disruption path, or more likely given my own research interests and style, the new market disruption game.