by Kent Lyons, May '22

Previously I provided some perspective on CHI 22 as a conference experience. Here I provide an overview of some of the research content itself. Many of these papers I saw in person. For a few, I took advantage of the prerecorded YouTube talks. You can find these papers in the program. I'm guessing the YouTube videos/talks will stay up indefinitely. The papers are free to access for now, but eventually ACM's paywall will kick in.

These are not in any particular order, but you can see my bias in CHI - cool new software and/or hardware systems with a few extras thrown in to spice things up.

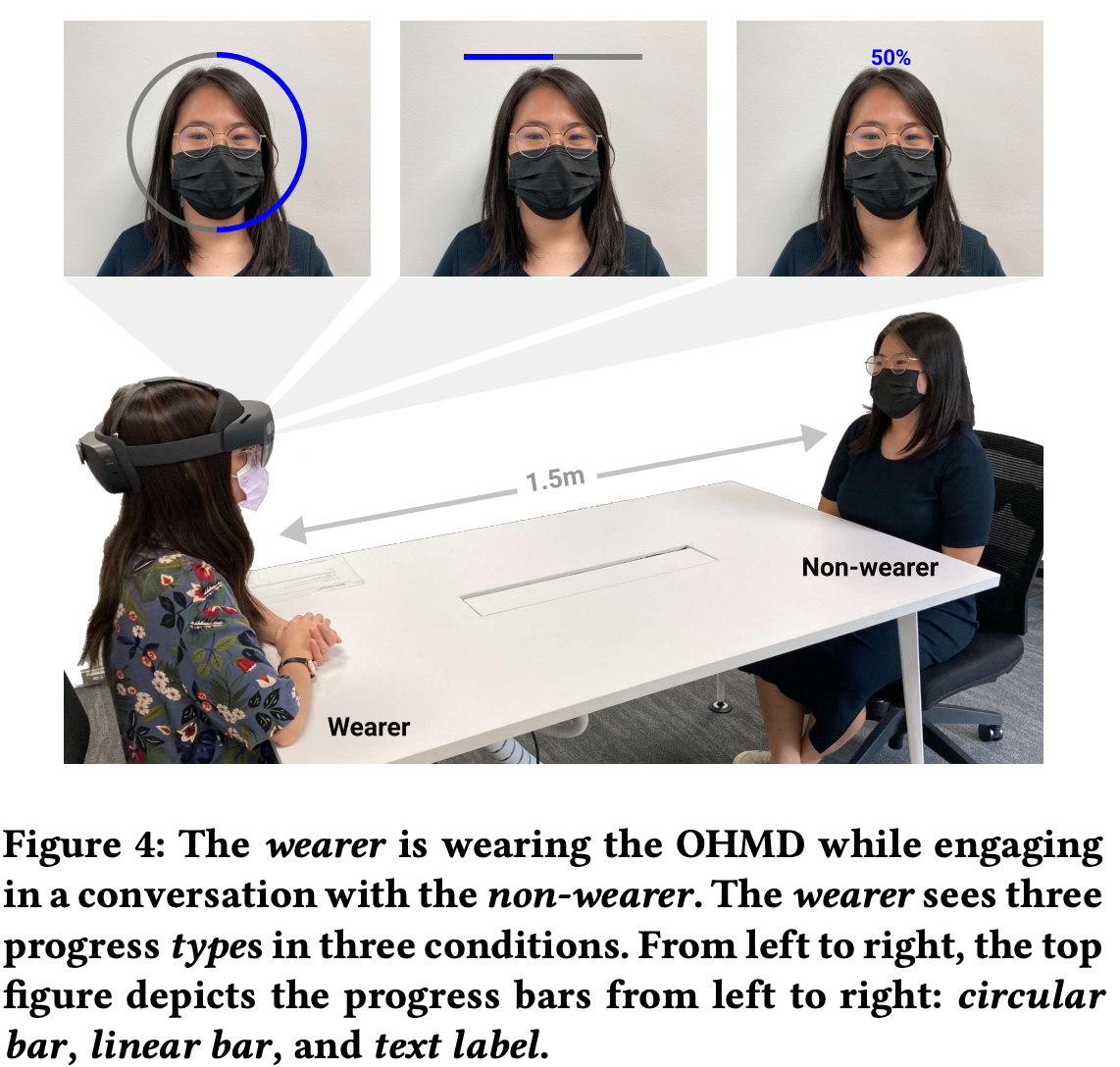

Paracentral and near-peripheral visualizations: Towards attention-maintaining secondary information presentation on OHMDs during in-person social interactions

Investigates placing information in "near periphery vision" while using a see-through HMD during a conversation. In particular, they explore placing different kinds of visualizations around the conversational partner's face.

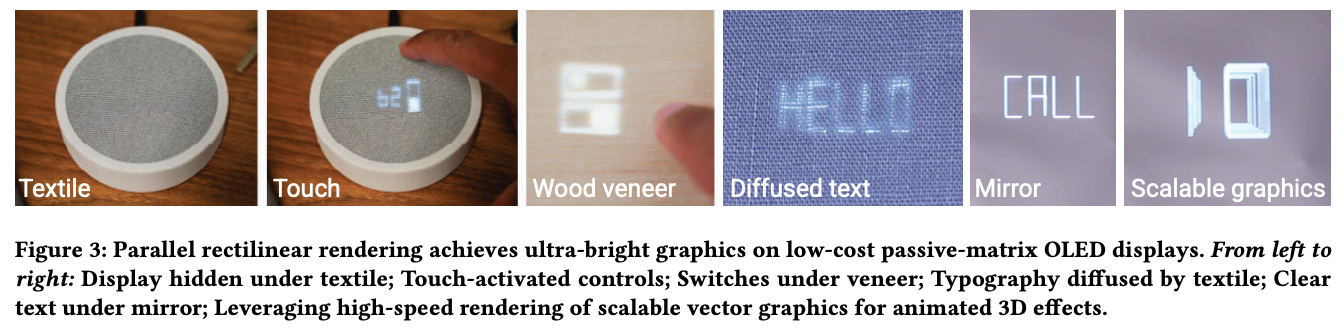

Hidden Interfaces for Ambient Computing: Enabling Interaction in Everyday Materials through High-brightness Visuals on Low-cost Matrix Displays

This work explores using a display under the surface of another material. The display light shines through the material and is only visible while on. This reminds me of our work on shimmering smartwatches, trying to make a watch that looked more like a watch, yet retain some smart capabilities. The key contribution of this work is in how they drive the display bright enough to show through the covering material.

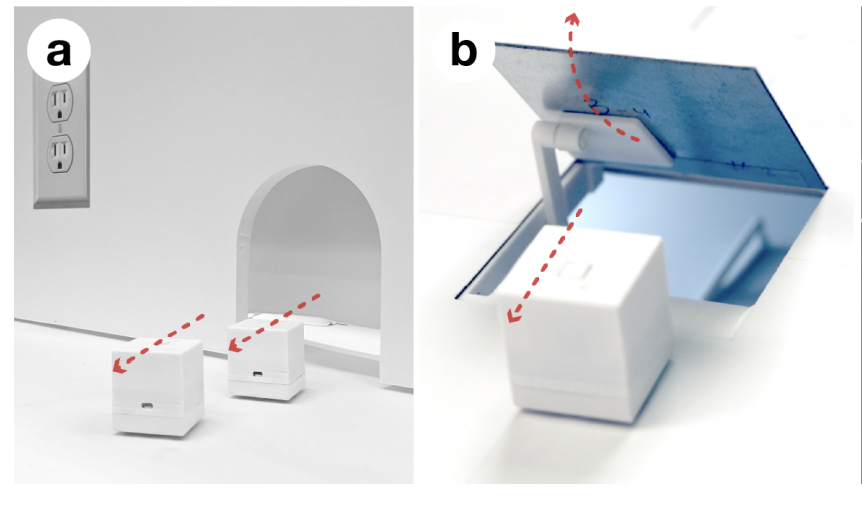

(Dis)Appearables: A Concept and Method for Actuated Tangible UIs to Appear and Disappear based on Stages

This work extends a series of work exploring small tabletop robots. The contribution of this paper is a stage (from theater) analogy. There are front of stage areas where the robots are visible, and back stage areas where the robots can disappear to or appear from - like an actor going on and off stage. The back stage area can serve to hide the robots, but is also an area where they can undergo transformations out of sight.

What might this be used for? I'm not really sure but it is very cool!

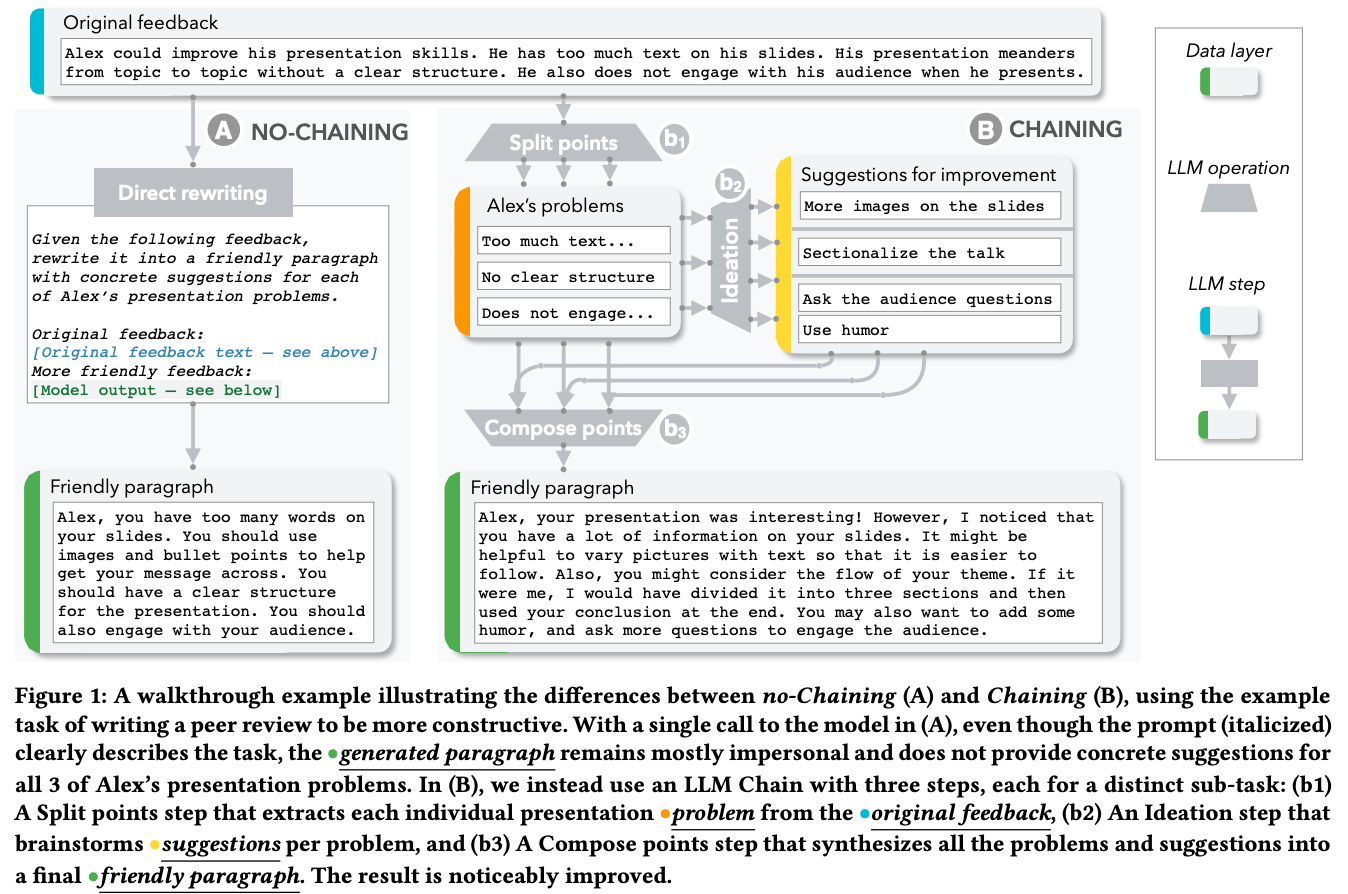

AI Chains: Transparent and Controllable Human-AI Interaction by Chaining Large Language Model Prompts

This work is looking specifically at LLMs, large language models, and some of the challenges they face. In particular for longer content they end up biasing themselves and produce lower quality output. This research investigates breaking down a language generation task into subtasks. Each subtask is then operated on independently to produce higher quality output. Each substep also provides a point of interaction for the end user where they can help steer the language generation by altering the prompt text.
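To make the chaining idea concrete, here is a minimal sketch of my own, not the authors' system. It assumes a hypothetical `fake_llm` function standing in for a real model call, and a hypothetical `edit` hook representing the point where the end user can alter the prompt text before each substep runs. The step templates are made up for illustration.

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call; it only echoes a tagged
    # prompt so the example runs without any model or network access.
    return f"<output for: {prompt}>"


def run_chain(
    task: str,
    steps: List[str],
    llm: Callable[[str], str] = fake_llm,
    edit: Callable[[int, str], str] = lambda i, prompt: prompt,
) -> List[str]:
    """Run each substep in order, feeding the previous output forward.

    `edit` receives the step index and the prompt about to be sent, and
    returns the (possibly rewritten) prompt. By default it changes nothing.
    """
    outputs = []
    previous = task
    for i, template in enumerate(steps):
        prompt = edit(i, template.format(input=previous))
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


# Illustrative three-step chain (the templates are assumptions).
steps = [
    "List the problems in this text: {input}",
    "Suggest a fix for each problem: {input}",
    "Rewrite the text using these fixes: {input}",
]
results = run_chain("my draft paragraph", steps)
```

Each entry in `results` is the output of one substep, so every intermediate result is available to inspect or steer rather than only the final text.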

I really like the idea of breaking down a larger task into smaller ones. And as the authors note, this general idea has been applied to crowd workers to improve overall content quality or let the crowd perform meaningful work on, say, a document that would otherwise take too much time or be too hard for any one worker to make progress on. To me this adds to a body of work looking at how we can harness a bunch of localized contributions, which might be of varying quality, to produce a high quality result.

A bit farther afield, this is similar to a mantra discussed in the world of small business. A key question for scaling is how to turn what the business owner does (someone who is hugely devoted and skilled at the business) into a set of steps that employees with less skill or experience can execute. That lets them provide meaningful help and frees the business owner to do other tasks.

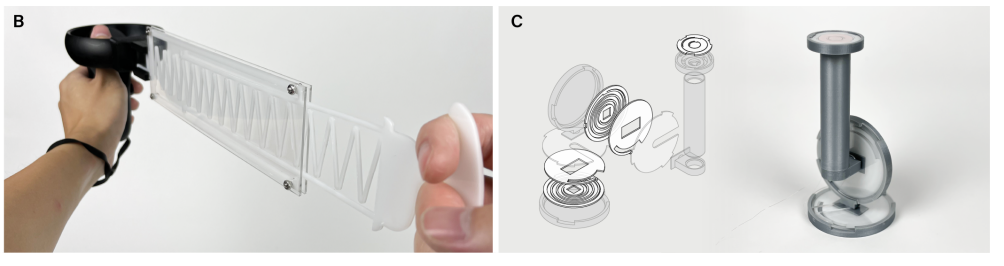

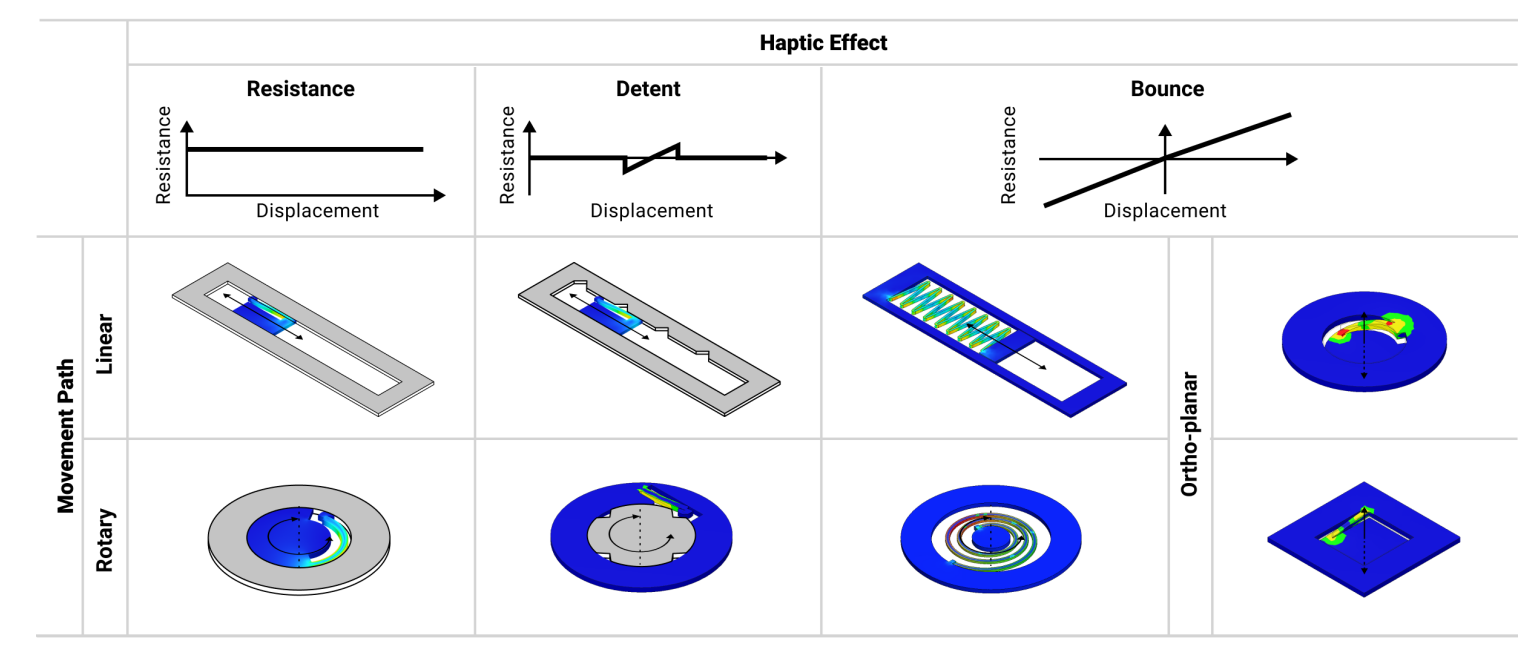

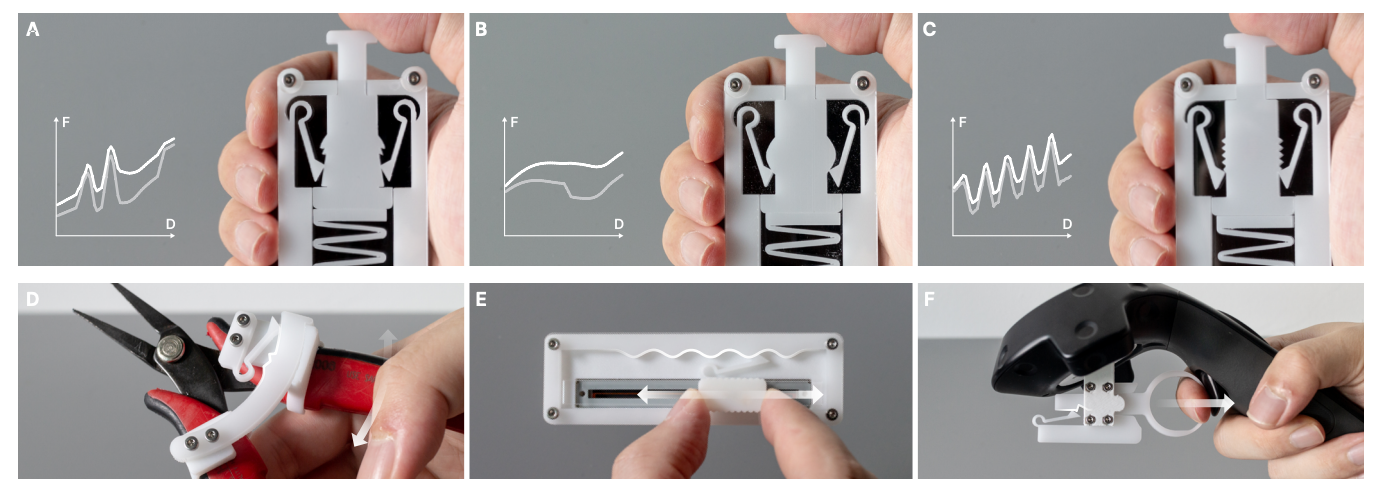

FlexHaptics: A Design Method for Passive Haptic Inputs Using Planar Compliant Structures

The authors present a tool and a bunch of interesting haptic structures made by combining sliding/rotating parts with different kinds of geometries. In addition to the tool, there are several interesting haptic designs shown.

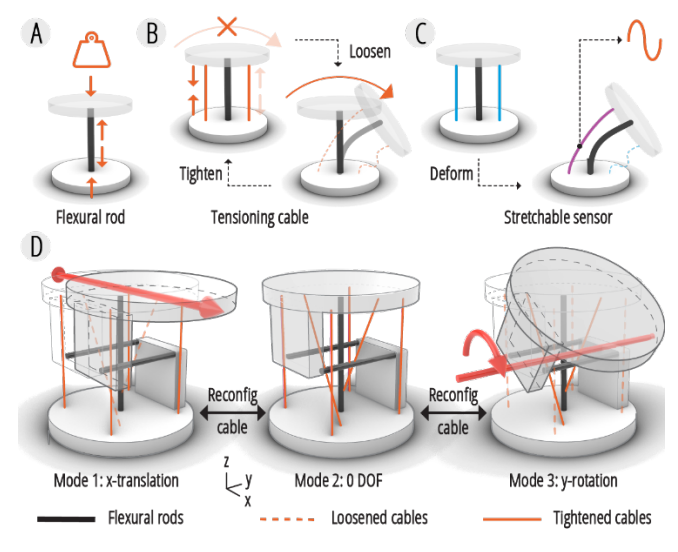

ReCompFig: Designing Dynamically Reconfigurable Kinematic Devices Using Compliant Mechanisms and Tensioning Cables

These authors explore structures that can change how they move by tensioning built-in cables to make them rigid or flexible.

Shape-Haptics: Planar & Passive Force Feedback Mechanisms for Physical Interfaces

These authors present several different haptic devices that leverage different kinds of springs and sliding mechanisms.

Driving from a Distance: Challenges and Guidelines for Autonomous Vehicle Teleoperation Interfaces

This paper is about challenges with people driving a car through a teleoperated interface. They present several different themes of challenges: "1. Lack of physical sensing (mentioned 31 times), 2. Human cognition and perception (28), 3. Video and communication quality (25), 4. Remote interaction with humans (19), 5. Impaired visibility (15), and 6. Lack of sounds (8)."

These themes came from comments of 8 different participants trying a telepresence driving experience. Only one had prior telepresence experience, and 3 had extensive video game experience. It is interesting to note that a lot of the challenges faced with this task might be similar to operating an FPV drone. Yet from my observations of drone pilots, these issues do not seem like significant impediments. It would be interesting to dig deeper here. Are these novice effects? Are they present, but don't significantly impact driving performance? Are they problems for FPV drone pilots, but those pilots have compensated in some way?

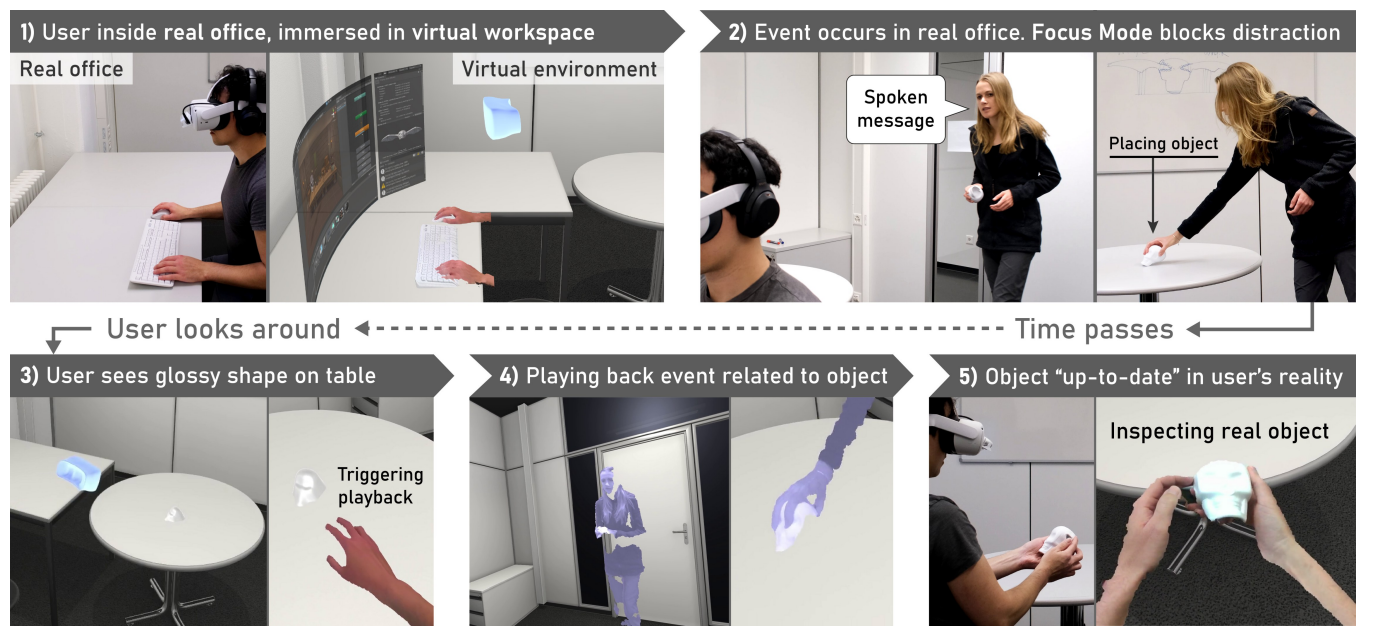

Causality-preserving Asynchronous Reality

This is a cool paper by a former colleague. The core idea is to have a VR system that can capture things happening in the real world around the user. When the user disengages from their primary VR task, the real world events can be played back, catching the user up on what happened around them.

This paper reminds me a bit of an old UIST paper (which took a good deal of searching for me to find, but I ultimately did!): "Mnemonic rendering: an image-based approach for exposing hidden changes in dynamic displays" from UIST 06 by Bezerianos et al. It investigated what happens in a desktop environment when content is occluded by other windows. They explored capturing that content while it was covered and replaying it once it was uncovered. The CHI paper seems to have a similar idea of capturing reality when one is not paying attention and offering a way to catch back up later.

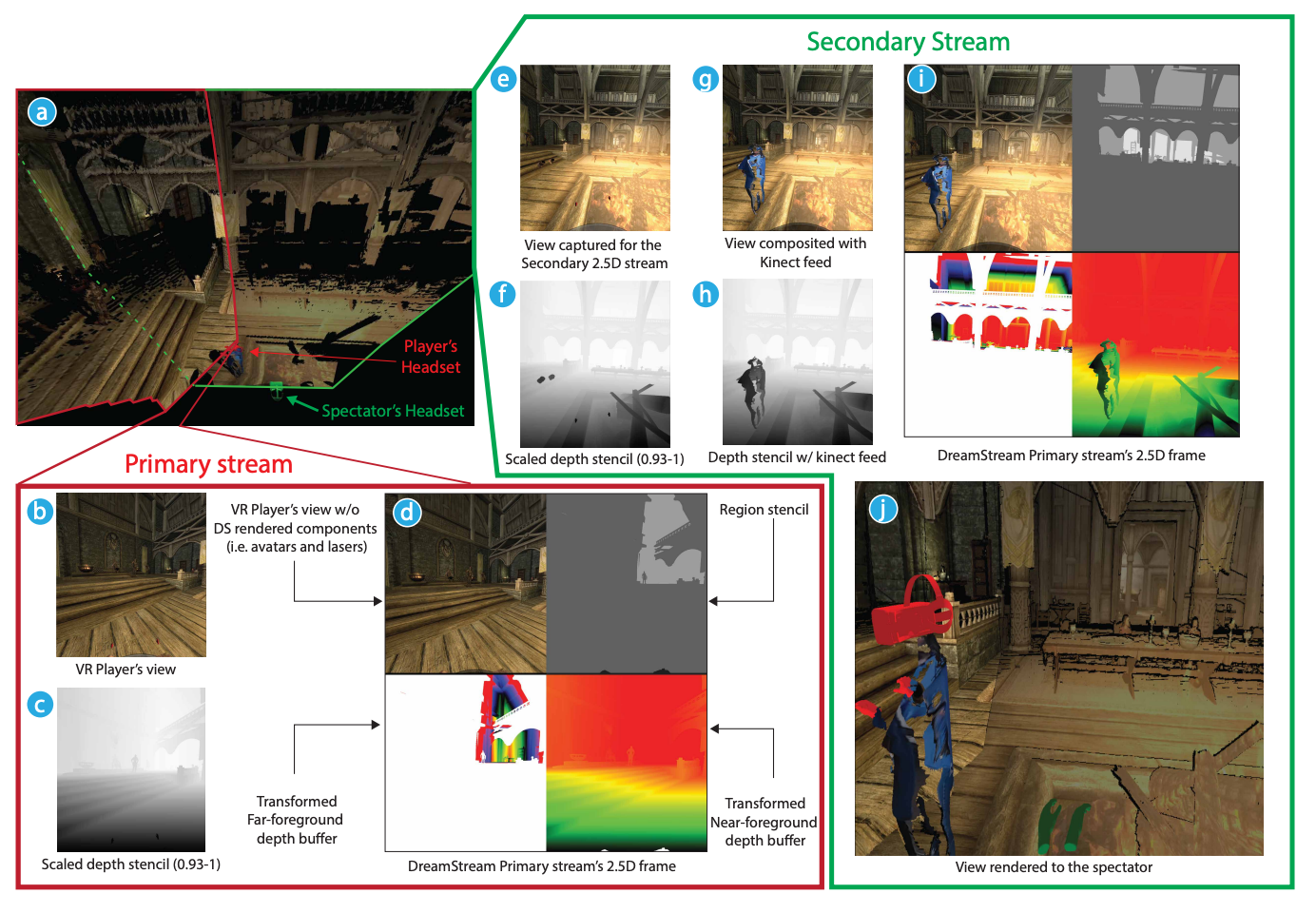

DreamStream: Immersive and Interactive spectating in VR

Makes the observation that spectating in a VR experience is very suboptimal. Just watching along from the first person perspective of the main player is no good. That seems analogous to watching FPV video, and the claim rings true to me. Due to a variety of architectural considerations they propose a 2.5D view of the virtual world.

I think this paper points to the larger area of challenge with VR systems - how do we provide multi-person social experiences?

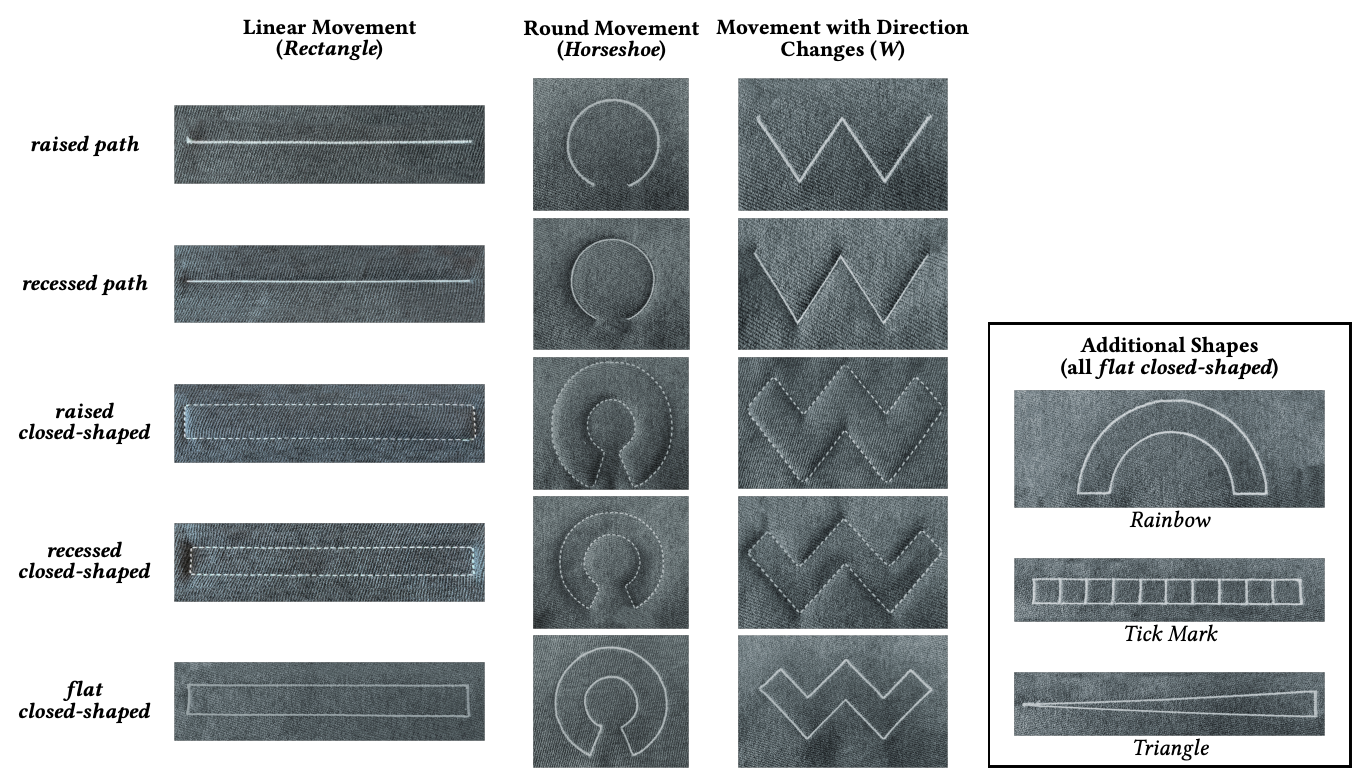

Shaping Textile Sliders: An Evaluation of Form Factors and Tick Marks for Textile Sliders

This work investigated different embroidered patterns to create a slider on fabric using conductive thread. They test a variety of patterns as well as raised and recessed shapes.

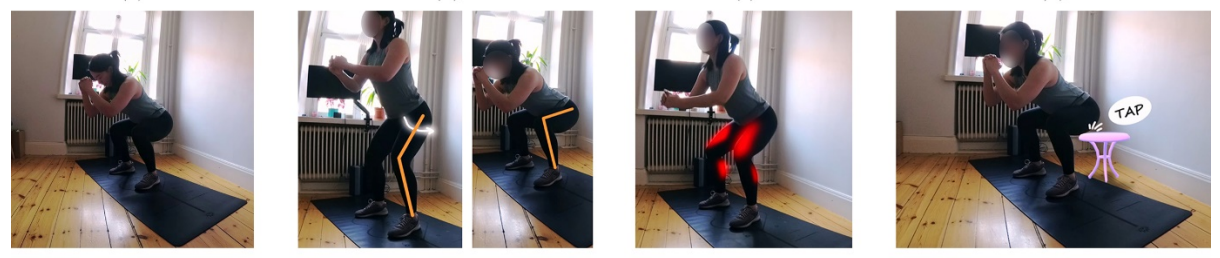

Visualizing Instructions for Physical Training: Exploring Visual Cues to Support Movement Learning from Instructional Videos

This work examines different ways to augment exercise videos. For instance, adding a skeleton to highlight key movements and where the end user should pay attention most. This seems like an interesting area to dig deeper, especially in the context of online training (live or prerecorded). How can the video best guide the end user in performing the right motions? This work seems rather preliminary in its formulation but is an interesting concept and could open the door for useful applications.

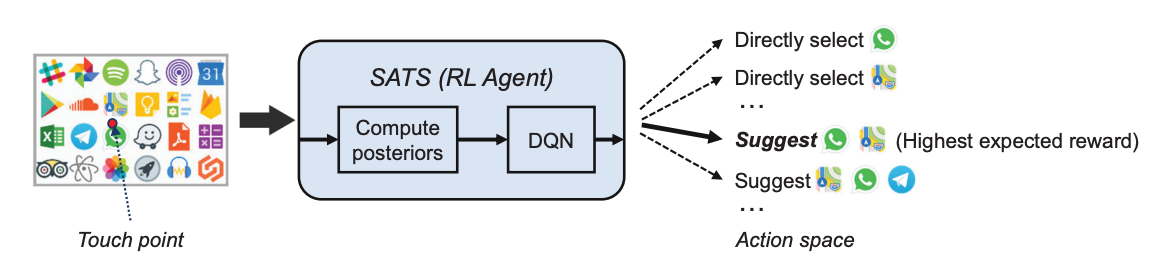

Select or Suggest? Reinforcement Learning-based Method for High-Accuracy Target Selection on Touchscreens

Disambiguating touch points on small targets has long been a problem in the mobile computing space and is even more so with tiny screens like those on smartwatches. This paper investigates this problem and uses one of today's favorite methods - machine learning. In particular, the authors use a reinforcement learning approach to disambiguate touch events. It either directly selects a target or provides a second step with suggestions so the user can provide further disambiguation. Their data shows improved error rates using their method especially with small targets.
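The select-versus-suggest decision can be illustrated with a toy sketch. To be clear, this is not the paper's reinforcement learning method: I am swapping in a hand-written distance rule just to show the two possible outcomes. The function name, the `margin` threshold, and the `k` suggestion count are all assumptions of mine.

```python
import math
from typing import List, Tuple

Target = Tuple[str, float, float]  # (name, center x, center y)


def select_or_suggest(
    touch: Tuple[float, float],
    targets: List[Target],
    margin: float = 2.0,
    k: int = 3,
):
    """Return ("select", name) when one target is clearly closest,
    otherwise ("suggest", [names]) listing the k nearest candidates.

    A fixed distance margin stands in for the learned policy here.
    """
    if not targets:
        raise ValueError("need at least one target")
    ranked = sorted(targets, key=lambda t: math.dist(touch, (t[1], t[2])))
    if len(ranked) == 1:
        return ("select", ranked[0][0])
    nearest = math.dist(touch, (ranked[0][1], ranked[0][2]))
    runner_up = math.dist(touch, (ranked[1][1], ranked[1][2]))
    if runner_up - nearest >= margin:
        return ("select", ranked[0][0])
    return ("suggest", [t[0] for t in ranked[:k]])


buttons = [("a", 0.0, 0.0), ("b", 10.0, 0.0), ("c", 20.0, 0.0)]
clear_touch = select_or_suggest((1.0, 0.0), buttons)
ambiguous_touch = select_or_suggest((5.5, 0.0), buttons)
```

A touch that lands squarely on one button is selected directly, while a touch between two buttons falls back to a short suggestion list for a second tap.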

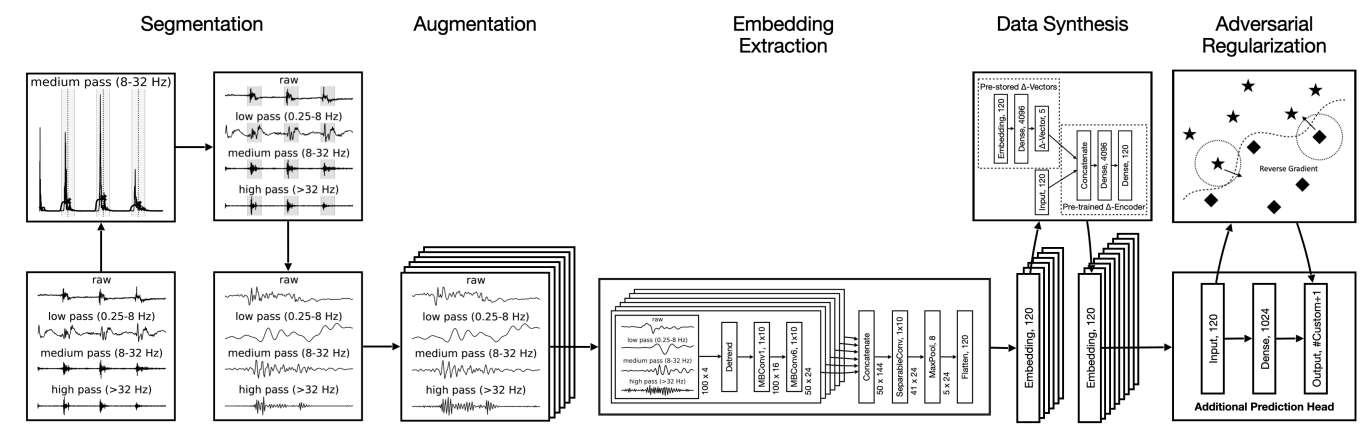

Enabling Hand Gesture Customization on Wrist-Worn Devices

This work out of Apple looks at hand gesture input as sensed by a smartwatch. In particular they look at customizing gestures for the user. Gesture recognition from wrist worn sensors has been an active area of research - but often that research is a proof of concept. It ignores issues that might be present if this were to be used in the real world. This work seems to take those on (and the long list of Apple authors indicates this is indeed a non-trivial problem). They propose a two-stage model that seems to be doing all of the right things. The first stage is a feature embedding of the sensor data. The second stage is the inference of the gesture. By separating the two, they can leverage the embedding for new gestures provided by the user with only a few examples. They also do things like data synthesis and adversarial regularization to make the most of the sparse data.
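Here is a rough sketch of why splitting the model into two stages helps with new gestures. It is only an illustration under my own assumptions, not the paper's model: the `embed` function is a hypothetical hand-written feature extractor standing in for a learned embedding, and the second stage is a simple nearest-centroid classifier that I chose for brevity.

```python
from typing import Dict, List, Sequence


def embed(signal: Sequence[float]) -> List[float]:
    # Stage 1 stand-in: a fixed feature extractor (mean and peak-to-peak
    # range). A real system would use a learned embedding instead.
    mean = sum(signal) / len(signal)
    return [mean, max(signal) - min(signal)]


class FewShotGestures:
    """Stage 2 stand-in: one centroid per gesture in embedding space."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def add_gesture(self, name: str, examples: List[Sequence[float]]) -> None:
        # Registering a gesture needs only a few examples, because the
        # embedding stage is reused and not retrained.
        vectors = [embed(e) for e in examples]
        self.centroids[name] = [sum(col) / len(vectors) for col in zip(*vectors)]

    def predict(self, signal: Sequence[float]) -> str:
        v = embed(signal)
        return min(
            self.centroids,
            key=lambda n: sum((a - b) ** 2 for a, b in zip(v, self.centroids[n])),
        )


model = FewShotGestures()
model.add_gesture("shake", [[-1, 1, -1, 1], [-2, 2, -2, 2]])
model.add_gesture("hold", [[1, 1, 1, 1], [1.1, 0.9, 1, 1]])
```

Adding a custom gesture only touches the second stage, which is the property that makes a handful of user-provided examples enough.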

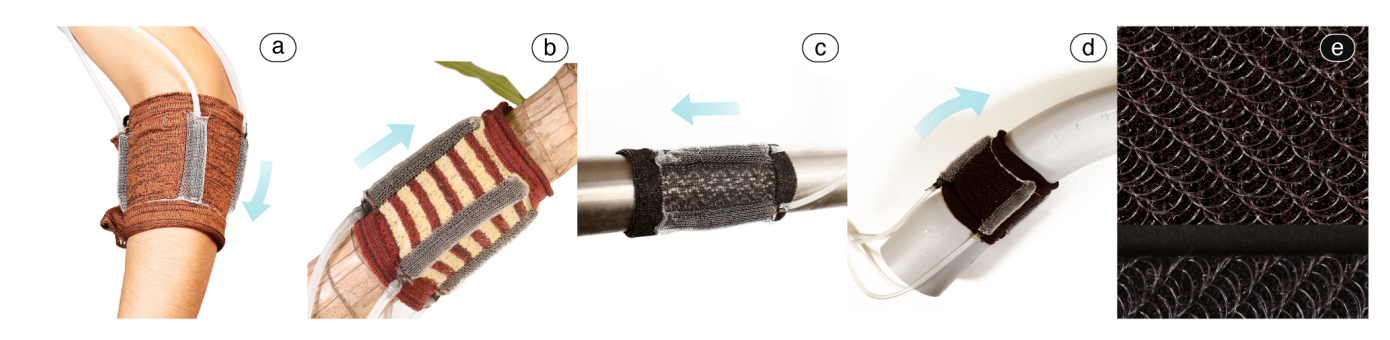

KnitSkin: Machine-Knitted Scaled Skin for Locomotion

This one is by a former intern (and her now students) exploring using knitted structures that can be actuated and move across the body.

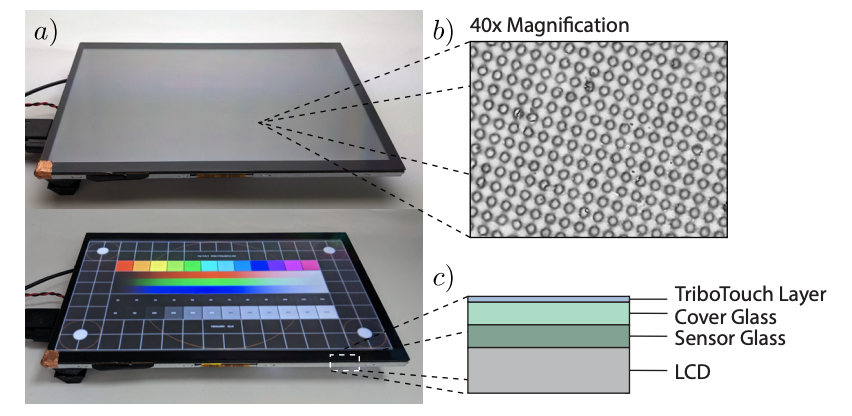

TriboTouch: Micro-Patterned Surfaces for Low Latency Touchscreens

This work explores reducing touch screen latency. The authors place a surface over the touch screen so that when a user rubs their finger over it, vibrations are induced. The vibrations are detected and run through an ML pipeline to predict where the finger is.

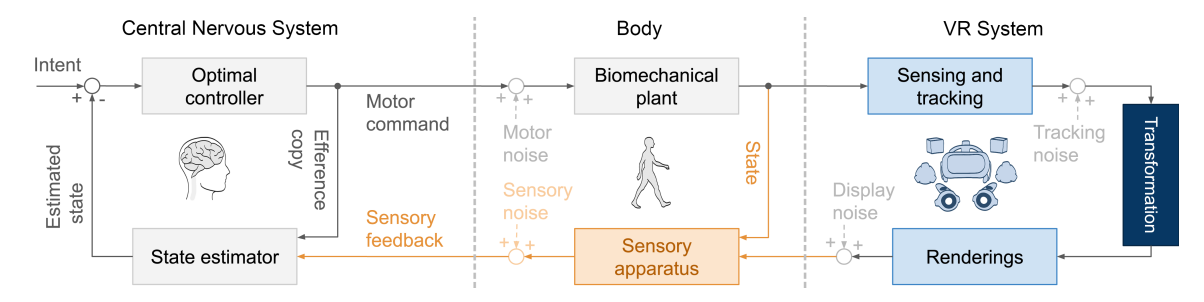

Beyond Being Real: A Sensorimotor Control Perspective on Interactions in Virtual Reality

This paper is different than most of what I've highlighted here - it's not focused on building something new. Instead it offers an interesting perspective on VR. One thing I appreciated was highlighting the disconnect between what the body experiences and what is rendered in the virtual world. I hadn't thought about this before, but that disconnect is fundamental and likely challenging in several ways. It likely also points to where some opportunities might be (and which ones might be dead ends due to human-centered issues).

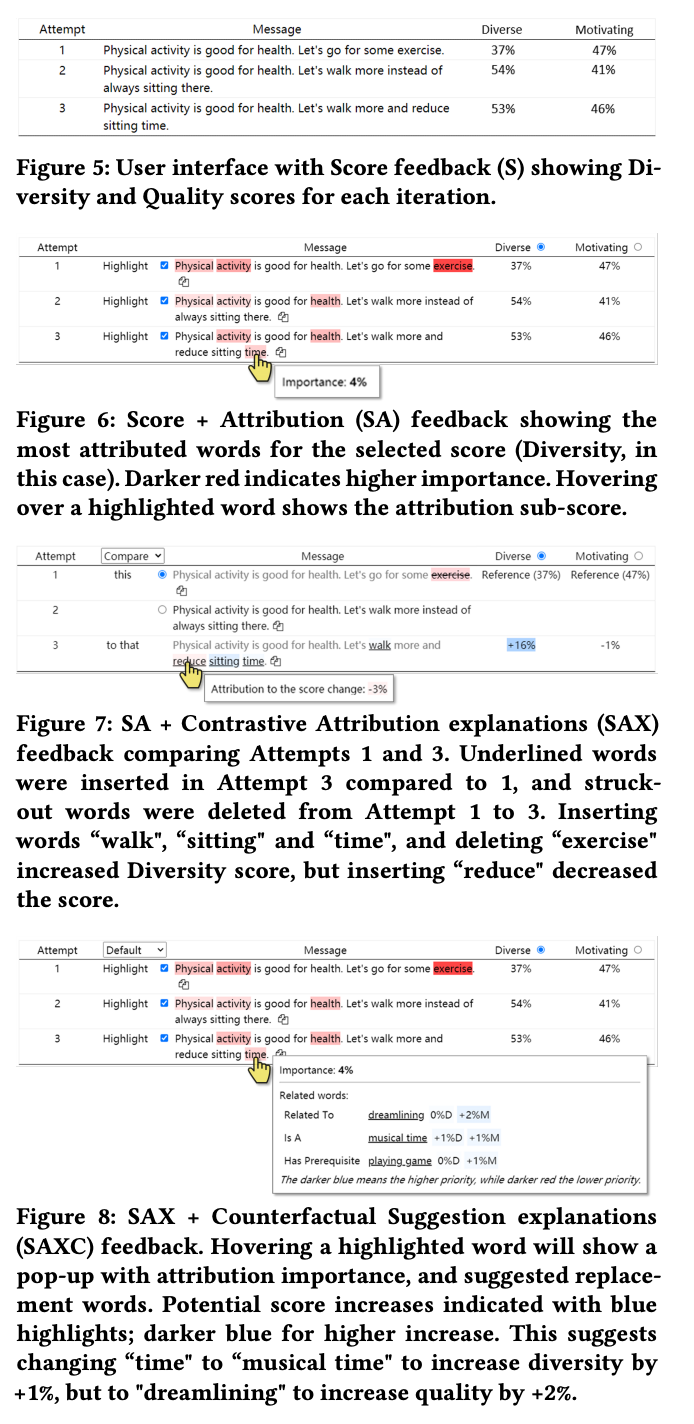

Interpretable Directed Diversity: Leveraging Model Explanations for Iterative Crowd Ideation

This research is back in the crowd sourcing space. These authors investigate giving additional feedback from an AI model to improve the quality of results obtained from the crowd.

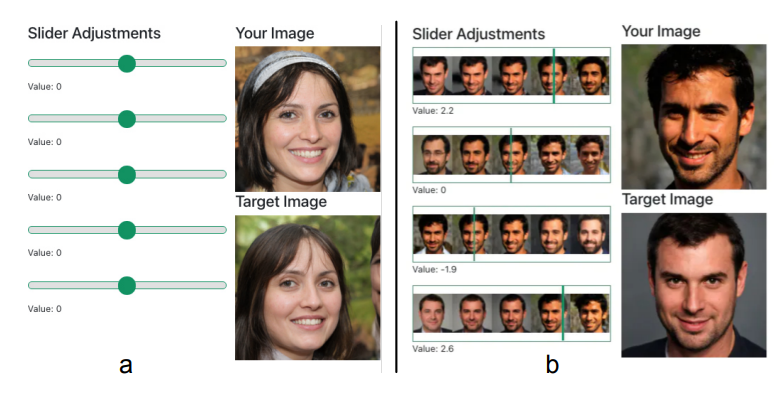

GANSlider: How Users Control Generative Models for Images using Multiple Sliders with and without Feedforward Information

This work investigates user input into a GAN to get intended results. They compare using numerous traditional sliders with their own technique, GANSlider, which shows different images along each dimension. This seems like an interesting interaction technique. But the data presented doesn't seem particularly favorable. Participants seem to be basically as fast using the new technique as when using the sliders. Maybe this is the wrong task to show off the potential?

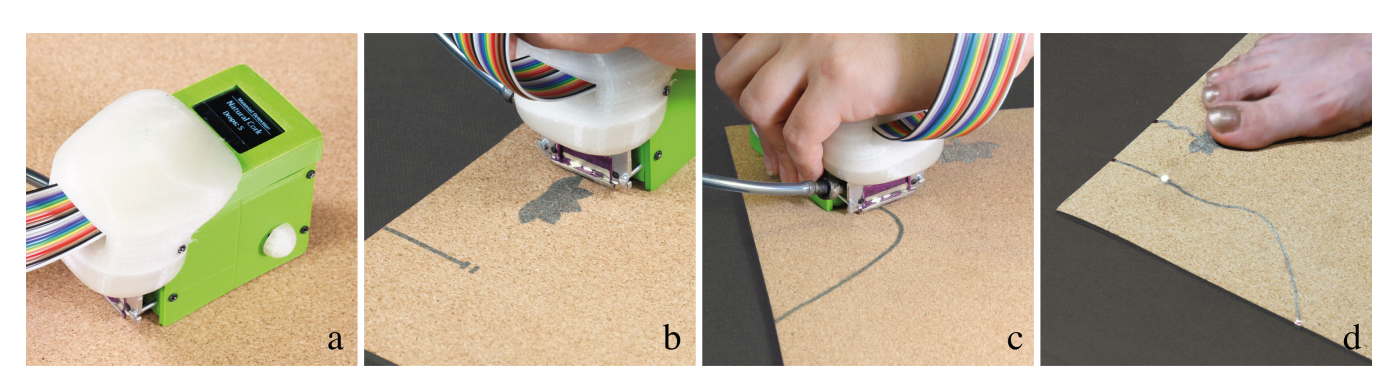

Print-A-Sketch: A Handheld Printer for Physical Sketching of Circuits and Sensors on Everyday Surfaces

This work uses a small, tracked, handheld printer to lay down conductive traces. It reminds me of the Media Lab work that led to the CNC actuated handheld router. Only this is a different domain. Are there end user cases where this kind of fabrication would be uniquely valuable?

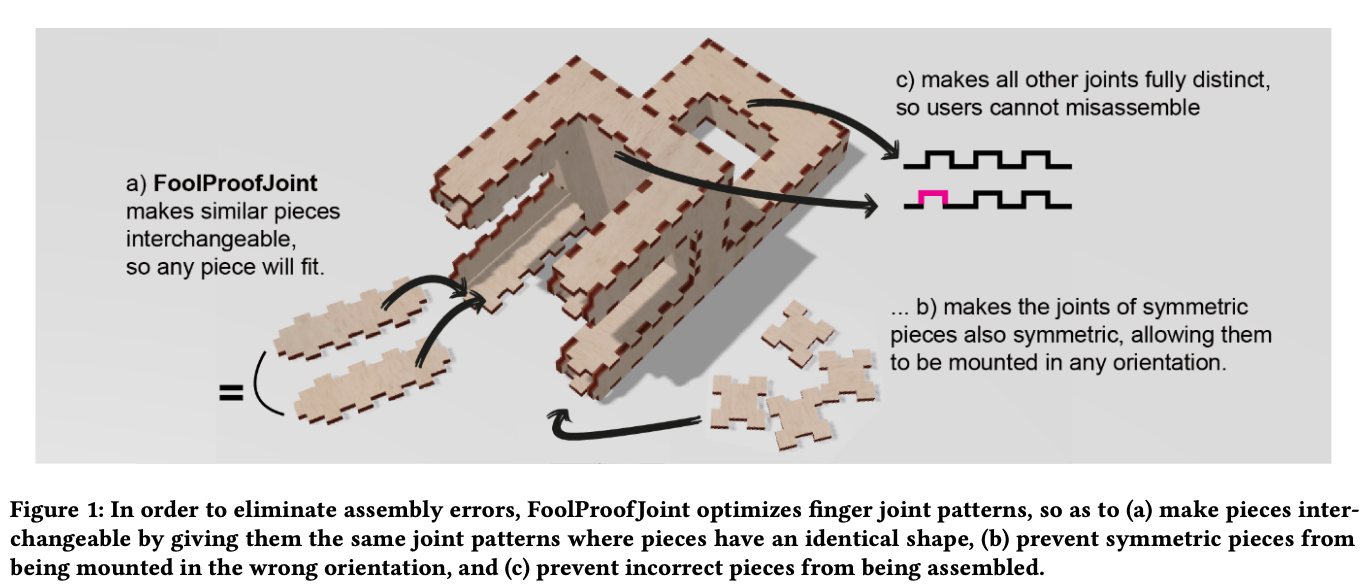

FoolProofJoint: Reducing Assembly Errors of Laser Cut 3D Models by Means of Custom Joint Patterns

Also in the fabrication space, this work looks at box joint connections (e.g. cut on a laser cutter). The spacing and positioning of the joints are computed so that the end user basically cannot assemble the parts in the wrong way. I could see applying similar principles to IKEA-like furniture. But I'm not sure if the types of joints there are conducive to this approach. More broadly, where assembly is done by less skilled labor, making it impossible to assemble the parts the wrong way through the construction of the parts themselves seems like an interesting approach. Could this apply to homes? Or to assembly lines (cars, aircraft, other)? It seems like an interesting twist on design for manufacturing.
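The underlying check can be sketched in a few lines. This is a heavily simplified model of my own, not the authors' algorithm: I assume each edge is a string of equal-width cells, where "1" is a finger and "0" is a slot, and that two edges fit when every finger faces a slot, read in either direction (to allow a flipped part). The names `mates` and `wrong_matches` are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def complement(pattern: str) -> str:
    # Swap fingers ("1") and slots ("0").
    return "".join("0" if c == "1" else "1" for c in pattern)


def mates(a: str, b: str) -> bool:
    """Two edges fit if b is the complement of a, as-is or reversed."""
    if len(a) != len(b):
        return False
    return b == complement(a) or b[::-1] == complement(a)


def wrong_matches(
    edges: Dict[str, str], intended: List[Tuple[str, str]]
) -> List[Tuple[str, str]]:
    """List every pair of edges that fits but was not intended to."""
    wanted = {frozenset(pair) for pair in intended}
    return [
        (x, y)
        for x, y in combinations(sorted(edges), 2)
        if mates(edges[x], edges[y]) and frozenset((x, y)) not in wanted
    ]


pairs = [("A", "B"), ("C", "D")]
# A uniform pattern: every edge fits several others, so mistakes are easy.
uniform = {"A": "1010", "B": "0101", "C": "1010", "D": "0101"}
# Distinct patterns: only the intended pairs fit.
custom = {"A": "1100", "B": "0011", "C": "1011", "D": "0100"}
```

With the uniform pattern, `wrong_matches` reports four unintended pairings, while the custom patterns leave none.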

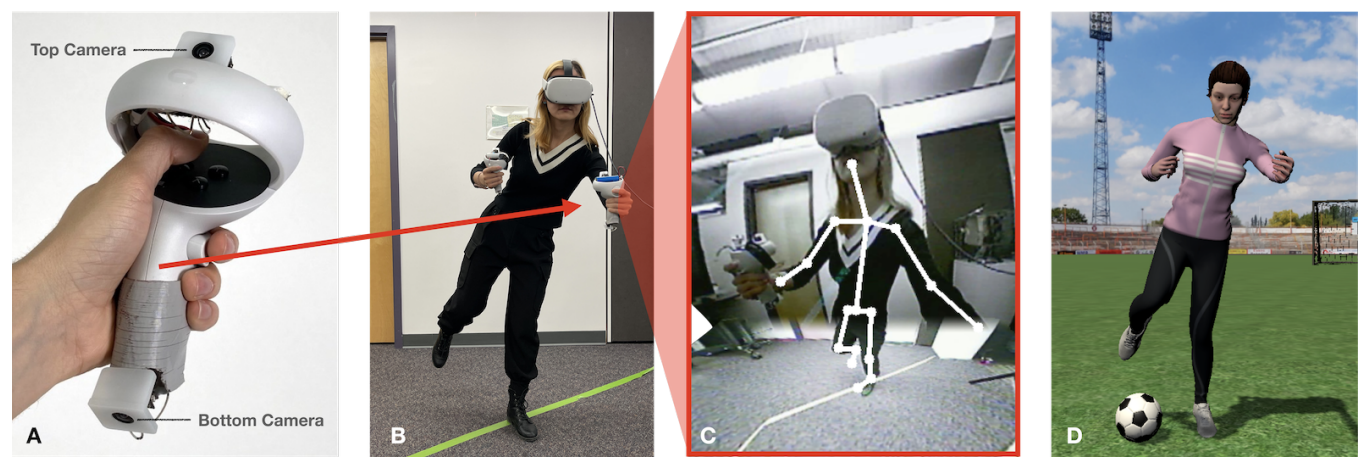

ControllerPose: Inside-Out Body Capture with VR Controller Cameras

This work uses cameras in VR controllers to help reconstruct body pose. The authors note that with normal inside out tracking (the glasses tracking the world), the user's body is largely not visible. By using cameras in the controllers, a different, better perspective is gained that allows for better reconstruction of body pose.

On a meta note, I'm still surprised we aren't seeing more solutions that leverage cameras as sensors for interaction problems. There are some, but they still seem relatively sparse relative to other sensors (maybe they are still too difficult to use).

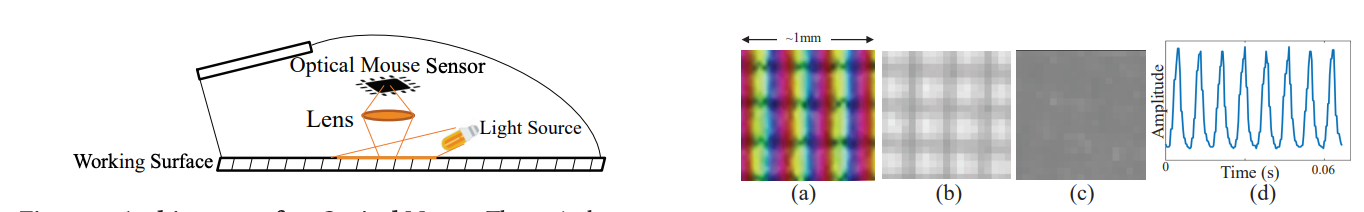

Enabling Tangible Interaction on Non-touch Displays with Optical Mouse Sensor and Visible Light Communication

Communicating through the screen is always a clever trick. Here the authors put a twist on it. They use optical mouse sensors for input and modulate the display brightness so as to communicate screen based (location based) information.

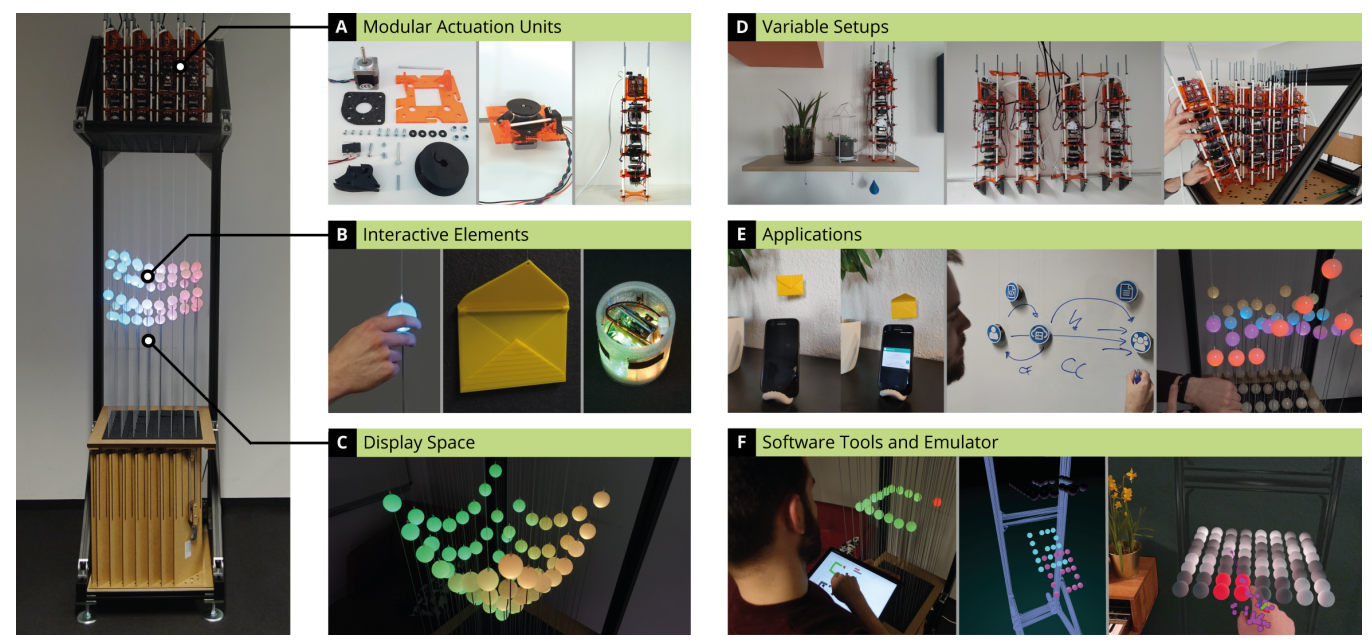

STRAIDE: A Research Platform for Shape-Changing Spatial Displays based on Actuated Strings

And one last paper that is just fun. Who doesn't need a 3D display made out of glowing ping pong balls?? This seems like a rather intricate mechanical build to be able to actuate all of the ping pong balls along the z axis independently.